Optimizing for Token Efficiency

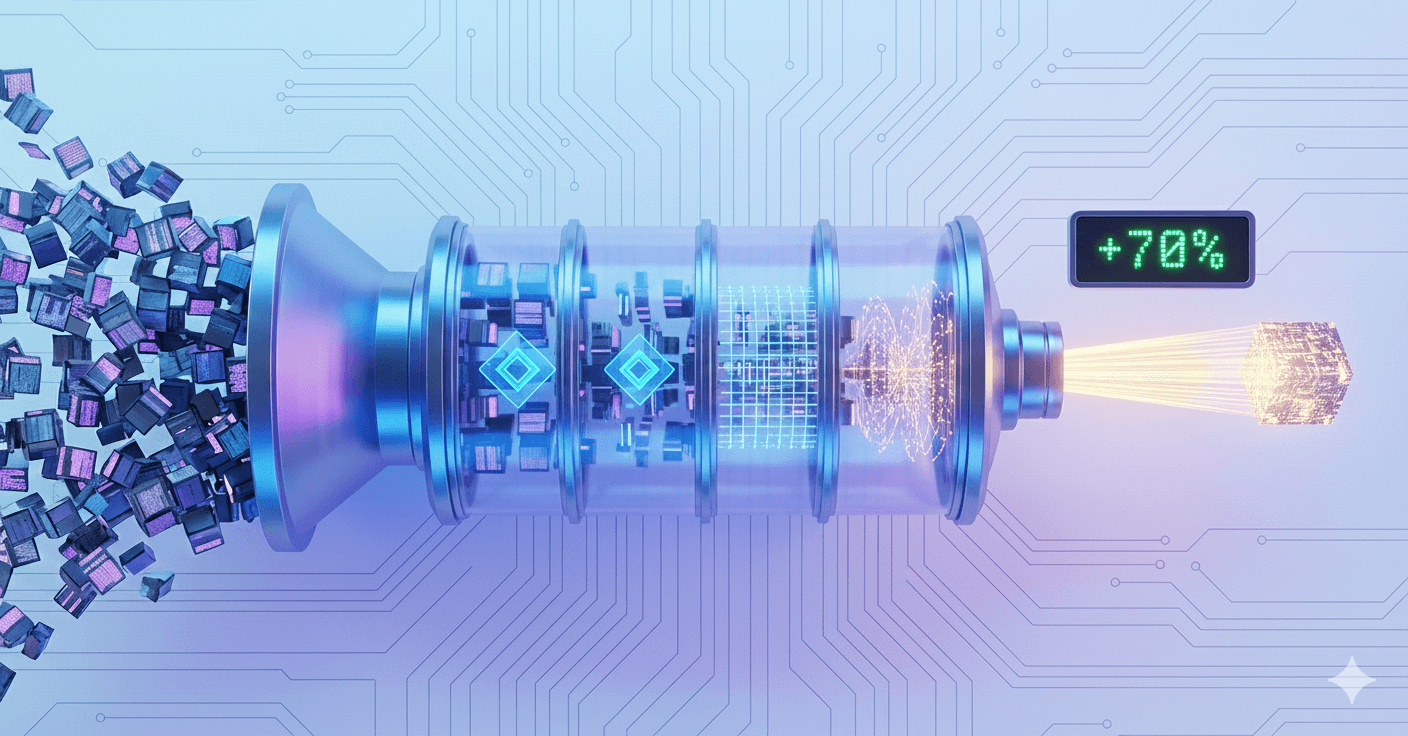

At CodeReviewr, we're building the most token-efficient code review tool on the market. Here's our roadmap to reducing token usage by as much as 70% while maintaining expert-level reviews.

At CodeReviewr, our business model is simple: you pay for what you use, calculated by the token. Conventional business logic suggests we should encourage you to use more tokens.

However, we believe the opposite. Our goal isn't to maximize token volume; it's to maximize value. A tool that burns through your budget with redundant data isn't a reliable partner.

Here is how we are working to make CodeReviewr the most token-efficient developer tool on the market today.

Transparency First

We don't believe in "black box" billing. Every time our agent performs a review, we provide clear insights into your token consumption:

- Where tokens were used: system prompts vs. code context vs. output.

- Why they were used: which files required the most context.

- How you can optimize your own configurations to lean out the process.

By giving you the data, we empower you to see exactly what you’re paying for.
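As a rough illustration of the kind of breakdown described above, the sketch below splits one review's token count into the three categories from the list and reports each as a share of the total. The `ReviewUsage` class, its field names, and the numbers are hypothetical and are not CodeReviewr's actual reporting format.

```python
from dataclasses import dataclass


@dataclass
class ReviewUsage:
    """Token counts for one review, split by where they were spent (hypothetical schema)."""
    system_prompt: int
    code_context: int
    output: int

    @property
    def total(self) -> int:
        return self.system_prompt + self.code_context + self.output

    def shares(self) -> dict[str, float]:
        """Return each category's share of the total as a percentage, rounded to one decimal."""
        parts = {
            "system_prompt": self.system_prompt,
            "code_context": self.code_context,
            "output": self.output,
        }
        if self.total == 0:
            return {name: 0.0 for name in parts}
        return {name: round(100 * count / self.total, 1) for name, count in parts.items()}


# Illustrative numbers only, not measurements from a real review.
usage = ReviewUsage(system_prompt=500, code_context=3500, output=1000)
print(usage.total)
print(usage.shares())
```

A breakdown like this makes it easy to see at a glance whether code context, rather than the review output itself, is where most of the spend goes.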

Roadmap to 70% Efficiency

We are obsessed with optimization. Over the next year, we are rolling out a series of updates designed to decrease token usage by as much as 70% while maintaining the expert-level code reviews you expect.

Here is the roadmap for how we’ll get there:

1. Intelligent Caching

Why pay to process the same "boilerplate" or library code twice? We are implementing sophisticated caching layers that recognize recurring context, ensuring you only pay for the new logic being introduced.
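One simple way to recognize recurring context is to key each chunk by a hash of its content. The sketch below is a minimal illustration of that idea, assuming context arrives as a list of text chunks; the `ContextCache` class and its `split` method are hypothetical names, and how cached chunks are actually billed or reused is outside what this sketch shows.

```python
import hashlib


class ContextCache:
    """Tracks which context chunks have been seen before, keyed by content hash."""

    def __init__(self) -> None:
        self._seen: set[str] = set()

    @staticmethod
    def _key(chunk: str) -> str:
        # Identical text always produces the same key, regardless of file name.
        return hashlib.sha256(chunk.encode("utf-8")).hexdigest()

    def split(self, chunks: list[str]) -> tuple[list[str], list[str]]:
        """Return (new_chunks, cached_chunks) and remember the new ones."""
        new: list[str] = []
        cached: list[str] = []
        for chunk in chunks:
            key = self._key(chunk)
            if key in self._seen:
                cached.append(chunk)
            else:
                self._seen.add(key)
                new.append(chunk)
        return new, cached


cache = ContextCache()
boilerplate = "import os\nimport sys\n"
add_fn = "def add(a, b):\n    return a + b\n"
sub_fn = "def sub(a, b):\n    return a - b\n"

first_new, first_cached = cache.split([boilerplate, add_fn])
second_new, second_cached = cache.split([boilerplate, sub_fn])
print(len(first_new), len(first_cached))
print(len(second_new), len(second_cached))
```

On the second review only `sub_fn` counts as new; the boilerplate is recognized as already processed.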

2. Codebase Indexing & Semantic Retrieval

Instead of dumping entire files into a prompt, we are building a specialized knowledge base for your codebase. By indexing your project, our agent can "search" for the exact context it needs, retrieving only the relevant snippets rather than the entire directory. The same index lets us apply semantic pattern matching to recognize code that has already been reviewed, so we avoid reviewing it twice.
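To make the retrieval idea concrete, here is a deliberately simplified sketch. It uses plain keyword overlap as a stand-in for semantic retrieval (a production system would more likely use embeddings), and the `SnippetIndex` class and the snippet names are hypothetical.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split on anything that is not a letter or digit."""
    return [word for word in re.split(r"[^a-z0-9]+", text.lower()) if word]


class SnippetIndex:
    """Keyword index over code snippets; a stand-in for a semantic index."""

    def __init__(self) -> None:
        self._snippets: list[tuple[str, Counter]] = []

    def add(self, name: str, source: str) -> None:
        # Index both the snippet's name and its body.
        self._snippets.append((name, Counter(tokenize(name + " " + source))))

    def search(self, query: str, top_k: int = 1) -> list[str]:
        """Return the names of the top_k snippets sharing the most terms with the query."""
        terms = tokenize(query)
        scored = []
        for name, counts in self._snippets:
            score = sum(counts[term] for term in terms)
            if score > 0:
                scored.append((score, name))
        # Highest score first; ties broken alphabetically for stable output.
        scored.sort(key=lambda pair: (-pair[0], pair[1]))
        return [name for _score, name in scored[:top_k]]


index = SnippetIndex()
index.add("auth.py::verify_token", "def verify_token(token):\n    return token in ACTIVE_TOKENS\n")
index.add("billing.py::charge", "def charge(user, amount):\n    ledger.append((user, amount))\n")
index.add("utils.py::slugify", "def slugify(title):\n    return title.lower().replace(' ', '-')\n")

print(index.search("where do we verify the token"))
```

Only the matching snippet would be placed in the prompt; the other two files never reach the model.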

3. Expanded Agent Tooling

We are giving our agent better "eyes and ears." By providing it with tools to query specific functions or file structures on demand, it can find answers quickly and precisely, rather than requiring massive context windows to be passed upfront.
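A tool of this kind can be quite small. The sketch below, which assumes the reviewed code is Python, uses the standard library `ast` module to return the source of a single function on demand instead of the whole file. The function names `get_function_source` and `list_functions` are hypothetical and do not describe CodeReviewr's actual tool set.

```python
import ast
from typing import Optional


def list_functions(module_source: str) -> list[str]:
    """Return the names of all functions defined in the module, sorted."""
    tree = ast.parse(module_source)
    return sorted(
        node.name
        for node in ast.walk(tree)
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))
    )


def get_function_source(module_source: str, function_name: str) -> Optional[str]:
    """Return the source text of the first function with this name, or None."""
    tree = ast.parse(module_source)
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)) and node.name == function_name:
            return ast.get_source_segment(module_source, node)
    return None


MODULE = '''import os

def load(path):
    with open(path) as f:
        return f.read()

def save(path, data):
    with open(path, "w") as f:
        f.write(data)
'''

print(list_functions(MODULE))
print(get_function_source(MODULE, "save"))
```

The agent can first ask for the list of functions, then pull in only the one it needs to inspect.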

4. Diff Pre-processing

Code diffs are often "noisy." Our upcoming Diff Pre-processing engine will strip away irrelevant metadata and non-functional changes before they ever hit the LLM, significantly reducing input tokens.
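The sketch below illustrates one possible form of this pre-processing on a unified diff: it drops git metadata lines and cancels out removed/added line pairs that differ only in whitespace. It is an assumption-laden simplification, not the actual engine. In particular, whitespace is significant in some languages (Python indentation, for example), lines that were merely moved are also dropped, and the hunk headers are left untouched, so the result is meant for a prompt rather than for `git apply`.

```python
from collections import Counter

# Git header lines that carry no information about the code change itself.
METADATA_PREFIXES = (
    "diff --git", "index ", "new file mode", "deleted file mode",
    "similarity index", "old mode", "new mode",
)


def normalize(line: str) -> str:
    """Remove all whitespace so formatting-only edits compare equal."""
    return "".join(line.split())


def is_change(line: str, sign: str) -> bool:
    """True for '+'/'-' change lines, excluding the '+++'/'---' file headers."""
    return line.startswith(sign) and not line.startswith(sign * 3)


def preprocess_diff(diff: str) -> str:
    lines = [ln for ln in diff.splitlines() if not ln.startswith(METADATA_PREFIXES)]
    removed = Counter(normalize(ln[1:]) for ln in lines if is_change(ln, "-"))
    added = Counter(normalize(ln[1:]) for ln in lines if is_change(ln, "+"))
    # Content present on both sides after normalization is treated as noise.
    drop_removed = removed & added
    drop_added = Counter(drop_removed)

    kept = []
    for ln in lines:
        for sign, budget in (("-", drop_removed), ("+", drop_added)):
            if is_change(ln, sign) and budget[normalize(ln[1:])] > 0:
                budget[normalize(ln[1:])] -= 1
                break  # skip this line: it is one half of a whitespace-only pair
        else:
            kept.append(ln)
    return "\n".join(kept)


RAW_DIFF = "\n".join([
    "diff --git a/app.js b/app.js",
    "index 83db48f..bf2a1c7 100644",
    "--- a/app.js",
    "+++ b/app.js",
    "@@ -1,4 +1,4 @@",
    " function total(items) {",
    "-  const tax = 0.1;",
    "-  return items.reduce((a,b) => a+b, 0) * (1 + tax);",
    "+  const tax = 0.2;",
    "+    return items.reduce((a,b) => a+b, 0) * (1 + tax);",
    " }",
])

cleaned = preprocess_diff(RAW_DIFF)
print(cleaned)
```

In this example the re-indented `return` line disappears from both sides, leaving only the change to `tax` for the model to review.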

5. Prompt Compression

We are compressing our system prompts. By removing redundancy and using "token-dense" instructions, we can express the same logic in a fraction of the token count.
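As a very small illustration of the "removing redundancy" half of this idea, the sketch below collapses extra whitespace and drops instructions that repeat an earlier line. It is only a toy: real prompt compression involves rewriting instructions, not just deduplicating them, and `rough_token_count` is a crude word-count proxy rather than a real tokenizer. Both function names are hypothetical.

```python
import re


def compress_prompt(prompt: str) -> str:
    """Collapse whitespace, drop blank lines, and drop repeated instructions."""
    seen: set[str] = set()
    kept: list[str] = []
    for raw_line in prompt.splitlines():
        line = re.sub(r"\s+", " ", raw_line).strip()
        if not line:
            continue
        # Compare case-insensitively and ignore a trailing period.
        key = line.lower().rstrip(".")
        if key in seen:
            continue
        seen.add(key)
        kept.append(line)
    return "\n".join(kept)


def rough_token_count(text: str) -> int:
    """Crude proxy: count whitespace-separated words, not real model tokens."""
    return len(text.split())


VERBOSE = """
You are a code reviewer.

Report only real bugs.
Be   concise.
Report only real bugs
Be concise.
"""

compressed = compress_prompt(VERBOSE)
print(compressed)
print(rough_token_count(VERBOSE), rough_token_count(compressed))
```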

6. The Triage Model

Not every code change requires a "Frontier-class" model. We are developing a triage system that uses smaller, faster models to handle simple tasks (like syntax or style checks) and reserves the heavy-hitting, expensive models for complex architectural logic.
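A triage step can be thought of as a routing function that runs before any expensive model is called. The sketch below uses simple hand-written rules purely for illustration; the `Change` fields, the thresholds, the file extensions, and the tier names `"small"` and `"frontier"` are all assumptions, and a real triage system might itself use a small model to make this decision.

```python
from dataclasses import dataclass

# Hypothetical: file types where a change is unlikely to need deep reasoning.
LOW_RISK_EXTENSIONS = (".md", ".txt", ".css")


@dataclass
class Change:
    path: str
    lines_changed: int
    touches_public_api: bool


def choose_model(change: Change, small_change_limit: int = 20) -> str:
    """Pick a model tier for a change using simple, illustrative rules."""
    # Anything touching a public interface goes to the expensive model.
    if change.touches_public_api:
        return "frontier"
    # Documentation, styling, and small edits go to the cheaper model.
    if change.path.endswith(LOW_RISK_EXTENSIONS) or change.lines_changed <= small_change_limit:
        return "small"
    return "frontier"


changes = [
    Change("README.md", 200, False),
    Change("src/api.py", 5, True),
    Change("src/util.py", 8, False),
    Change("src/core.py", 300, False),
]
routes = [choose_model(change) for change in changes]
print(routes)
```

The point of the design is that the routing decision itself costs almost nothing, so the expensive model is only paid for when a change plausibly needs it.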

Looking Forward

Efficiency is a technical challenge we are excited to solve. As we roll out these features throughout the year, we will be publishing a series of technical deep-dives into each technique mentioned above.

We want CodeReviewr to be the smartest tool in your stack and the most responsible one for your bottom line.